Chief Scientist presents VividQ's Advances in Hardware Design for AR Wearables at FiO LS

VividQ has long been the world leader in algorithm development for Computer-Generated Holography. Our Software Development Kit powers a range of applications from automotive Head-Up Displays to gaming headsets manufactured by OEMs worldwide. Over the years, our technical teams have also generated a range of licensable hardware IP by prototyping groundbreaking optical designs to test the capabilities of our software in-house.

Last November, Chief Scientist Andrzej Kaczorowski presented VividQ’s research and development journey in prototyping optical systems for Augmented Reality (AR) wearables at the Optica Frontiers in Optics and Laser Science (FiO LS) conference. We are proud to have been invited to give the talk by industry experts Douglas Lanman, Director of Display Systems Research at Facebook Reality Labs, and Kaan Aksit, Associate Professor at University College London.

It all starts with the software

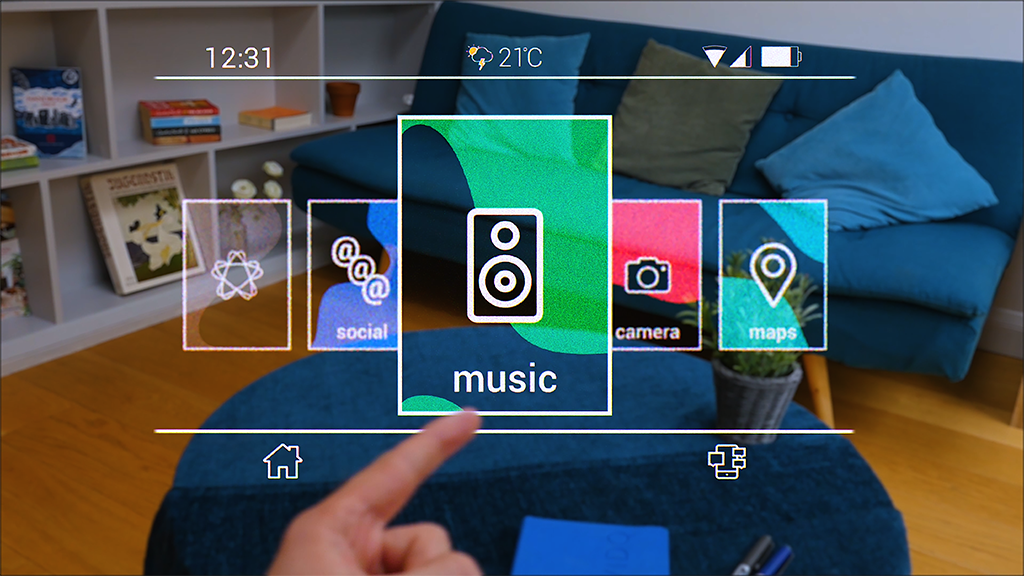

Computer-Generated Holography (CGH) has long been considered the pinnacle of display technology, yet before 2017 there was no viable pipeline for consuming and processing 3D content for holographic display. Most computer games already hold all the necessary data about the distances between the camera and the objects in a scene, but this information was discarded before the image was even displayed on the screen. VividQ’s SDK addressed this problem, offering an easy-to-use, unified pipeline for rendering holograms in real time, on off-the-shelf display elements, from a range of content sources, including the most popular game engines. Since then, we have been on a journey to create the most advanced AR devices in the world, supporting the development efforts of our partners and customers while pushing the boundaries of innovation in our VividQ labs.
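To make the point about discarded distance data concrete, here is a minimal sketch of how per-pixel distances can be recovered from a game engine's depth buffer. It is not taken from VividQ's SDK: it assumes an OpenGL-style perspective projection with the default depth range of 0 to 1, and the function name `linearise_depth` and the near and far plane values are illustrative choices only.

```python
import numpy as np

def linearise_depth(depth_buffer, near, far):
    """Convert OpenGL-style depth-buffer values in [0, 1] to eye-space distances.

    Assumes a standard perspective projection with the given near and far
    clipping planes (hypothetical values; a real engine supplies its own).
    """
    d = np.asarray(depth_buffer, dtype=np.float64)
    z_ndc = 2.0 * d - 1.0  # map [0, 1] to normalised device coordinates [-1, 1]
    return (2.0 * near * far) / (far + near - z_ndc * (far - near))

# A tiny 2x2 "depth buffer": 0.0 lies on the near plane, 1.0 on the far plane.
depth = np.array([[0.0, 0.5], [0.9, 1.0]])
distances = linearise_depth(depth, near=0.1, far=100.0)
print(distances)
```

Note how non-linear the stored values are: with these planes, a buffer value of 0.5 corresponds to a distance of only about 0.2 units. A conventional 2D display throws this per-pixel distance away after hidden-surface removal, whereas a holographic pipeline can use it to place each pixel at its own focal depth.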

The hardware prototypes that made it all possible

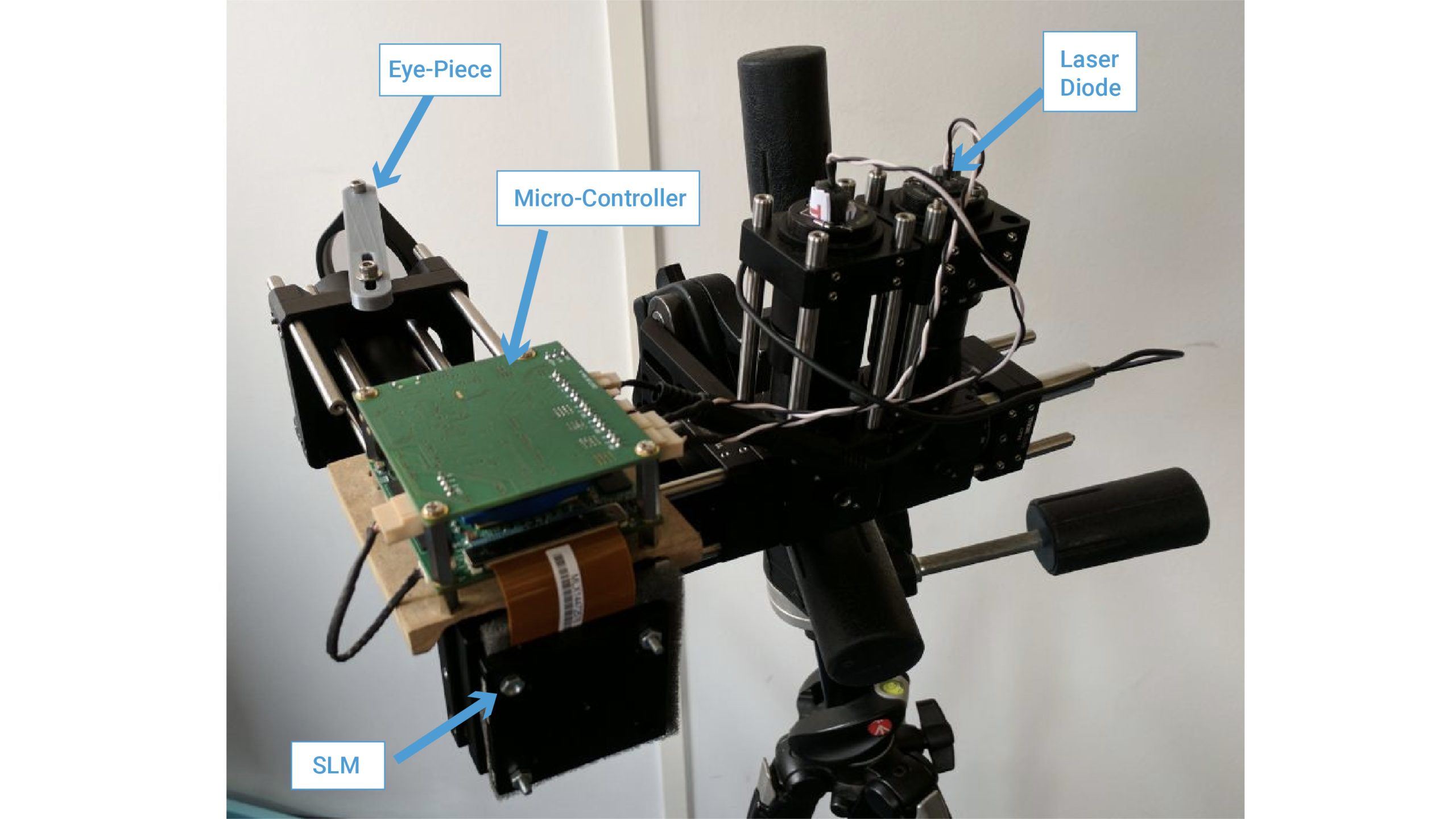

The first demonstrator of our SDK's capabilities for real-time rendering of holograms was built entirely from off-the-shelf components, bolted onto a tripod. The optical setup included three laser diodes (red, green and blue) to produce full-colour images, a ForthDD QXGA Spatial Light Modulator (a device that controllably modulates the laser light), and a microcontroller to synchronise the two. While still quite basic, this demonstrator allowed us to record the famous video in which a pink elephant was projected onto a beam-splitter, creating a true augmented reality experience with holography. This innovation started the VividQ journey towards the ultimate AR wearable device.

The optical set-up previously presented on a tripod was swiftly packaged into a more ergonomic form factor: a monocular wearable device. VividQ Headset Prototype V1 was unveiled at AWE Europe 2019 and travelled worldwide, demonstrating the advantages of Computer-Generated Holography in AR devices.

The feedback we gathered with Headset V1 on SDK performance, and on the optical requirements for the best AR experience, set our roadmap for the next-generation device. In 2020 we made major advances in algorithms for CGH, enhancing the image quality of holographic projections while maintaining real-time performance. In addition, our expanding expertise in hardware design resulted in a patentable design for a miniaturised optical engine, incorporating the smallest-of-its-kind LCoS (liquid crystal on silicon) Spatial Light Modulator from Compound Photonics. These developments were demonstrated as part of a binocular Headset Prototype V2, unveiled at the SPIE AR|VR|MR conference in San Francisco in 2020. With binocular viewing, precise eye box placement and interpupillary distance (IPD) adjustments, this prototype was a step change in the quality of AR experiences, powered by VividQ’s SDK.

What's the next big thing?

Headset Prototypes V1 and V2 were a big step forward in AR device development with Computer-Generated Holography, using phase LCoS Spatial Light Modulators as display elements. While likely to be the ultimate winner for AR wearables, phase LCoS is still a nascent technology. Its amplitude-based counterparts, on the other hand, are far more mature. Amplitude modulators, particularly DMDs (Digital Micromirror Devices), are relatively low cost, widely available, and already used across many AR applications. However, CGH on DMDs has yielded undesirable visual results in the past. VividQ has taken up the challenge of addressing this, developing a new algorithmic approach that generates exceptionally high-quality holograms on amplitude-modulating display elements. VividQ Hardware Development Kits including this invention (licensable IP and optical engine designs) will be released soon, bringing AR devices much closer to near-term consumer applications with Computer-Generated Holography.

To learn more about our recent developments in CGH and headset prototypes, please email us at holo@vividq.com.

Watch Andrzej's presentation at the Optica FiO LS event here:
