VividQ Research on Media Production Workflow for Computer-Generated Holography Wins SMPTE Award

VividQ continuously supports the best research in holographic display by hosting a team of postgraduate students, led by the company’s Co-Founder and Chief Scientist, Dr Andrzej Kaczorowski.

Aaron Demolder, VividQ Research Engineer and EngD Candidate, has won the Best Student Paper award from the prestigious Society of Motion Picture and Television Engineers (SMPTE). The Oscar® and Emmy® award-winning association, which counts Disney, Dolby, Google, Netflix and Apple amongst its members, is a global society of professionals, technologists and engineers from the media and entertainment industry.

Aaron’s research paper Towards the Standardization of High-Quality Computer-Generated Holography Media Production Workflow was first published in the Jan/Feb 2022 SMPTE Motion Imaging Journal.

Computer-Generated Holography for Media and Entertainment

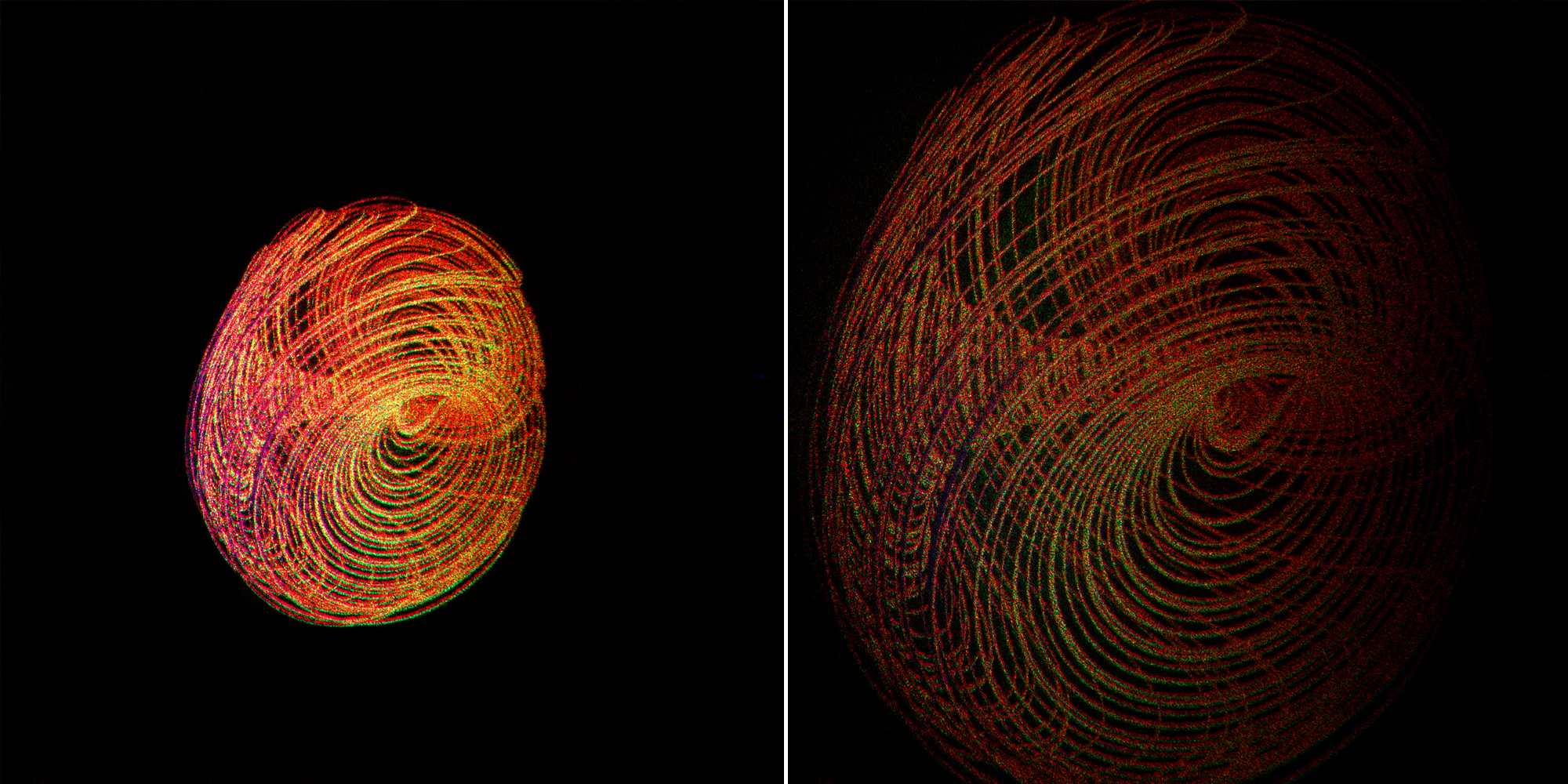

Computer-Generated Holography (CGH) is an immersive next-generation display technology with exciting prospects for television and cinema. By modulating laser light via a spatial light modulator (SLM), a holographic display can project a three-dimensional scene into the viewer’s eyes. The resulting holographic image is the closest to a real-world scene representation where physiological and psychological depth cues are preserved.

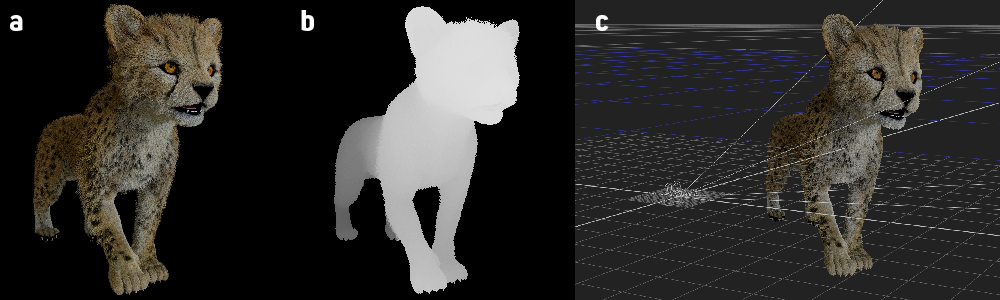

CGH uses three-dimensional data sources as input - such as renderers, depth-sensing cameras or depth synthesis from stereo/mono video. There are numerous techniques for processing 3D data for display. However, there is currently no established workflow for delivering this required data to the hologram generation software suite in practice.

In existing high-end cinema workflows, artists combine two-dimensional backplate footage with three-dimensional CGI content, and the result is delivered in 2D. The content can then be shown on 2D display devices, via a streaming service or cinema projection. The critical difference for holographic display is that all of this data must still exist in 3D at delivery, handed over from the VFX process alongside the additional data needed to create high-quality, immersive 3D content.

This research paper proposes a new high-quality hologram (HQH) standard that establishes the rules for developing content for holographic display. The HQH format aims to contain all the data required to deliver a rich holographic experience while ensuring compatibility when integrated with existing content production methods.

To create a successful display, the HQH workflow will provide:

Data Representation in Real 3D

In VFX, depth data - representing the distance from the camera to the CGI object - is produced during the rendering process. Depth data is useful for placing objects in 3D space and for simulating depth of field during compositing, one of the final VFX steps. This data is normally discarded at delivery because it is not required for 2D display. CGH, by contrast, uses it to create holographic images that are truly three-dimensional.
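To make the role of depth data concrete, here is a minimal sketch of how a rendered depth map can be turned back into 3D points. It assumes a simple pinhole camera, depth measured along the optical axis, and a principal point at the image centre. The function name `unproject_depth` and the parameter `focal_px` are illustrative choices, not part of the HQH proposal.

```python
from typing import List, Tuple

Point3D = Tuple[float, float, float]


def unproject_depth(depth: List[List[float]], focal_px: float) -> List[Point3D]:
    """Convert a per-pixel depth map into 3D points in camera space.

    Assumptions (illustrative only): pinhole camera, depth is the Z distance
    along the optical axis, principal point at the image centre.
    """
    height = len(depth)
    width = len(depth[0])
    cx = (width - 1) / 2.0  # principal point, x
    cy = (height - 1) / 2.0  # principal point, y

    points: List[Point3D] = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            # Similar triangles: offset from the centre scales with depth.
            x = (u - cx) * z / focal_px
            y = (v - cy) * z / focal_px
            points.append((x, y, z))
    return points


# A 3x3 depth map: a near object (1 m) in front of a far background (2 m).
depth_map = [
    [2.0, 2.0, 2.0],
    [2.0, 1.0, 2.0],
    [2.0, 2.0, 2.0],
]
points = unproject_depth(depth_map, focal_px=2.0)
```

Each pixel ends up with its own position in space. That per-pixel position is the information a 2D delivery throws away and a 3D delivery would need to keep.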

Large-scale holographic displays, such as VividQ’s Holographic LCD concept, naturally present multiple views of a scene. Displays of this nature let a viewer look around scenes or behind virtual objects from many perspectives, and let multiple viewers see slightly different views of the same scene. Alongside support for multiple views, the format needs to support multiple colour and depth values per pixel. The proposed HQH system also enables more complicated viewing situations, as detailed in Aaron’s previous research on reflective and refractive materials for holographic display. This method lets users focus on specific objects in a virtual scene - across multiple depth planes - without hindrance.
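One way to picture "multiple colour and depth values per pixel" is a pixel that stores a list of samples rather than a single value. The sketch below is hypothetical: the article does not describe the actual HQH data layout, and the names `Sample` and `DeepPixel` are invented for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

RGB = Tuple[float, float, float]


@dataclass
class Sample:
    depth_m: float  # distance from the camera, in metres
    rgb: RGB        # linear colour of whatever is seen at that depth


@dataclass
class DeepPixel:
    """A pixel holding several (depth, colour) samples. Hypothetical layout."""
    samples: List[Sample] = field(default_factory=list)

    def add(self, depth_m: float, rgb: RGB) -> None:
        self.samples.append(Sample(depth_m, rgb))
        # Keep samples ordered from nearest to farthest.
        self.samples.sort(key=lambda s: s.depth_m)

    def depths(self) -> List[float]:
        return [s.depth_m for s in self.samples]


# A pixel looking through a glass window at an object behind it:
# the far object is added first, the glass surface second.
pixel = DeepPixel()
pixel.add(4.0, (0.8, 0.1, 0.1))   # red object behind the glass
pixel.add(1.5, (0.9, 0.9, 1.0))   # faint reflection on the glass itself
```

With both samples kept, a viewer could in principle focus on either the glass surface or the object behind it. A single depth value per pixel cannot express that.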

High-Dynamic Range Handling

High-dynamic range (HDR) is a crucial element of lifelike display. In holographic display, laser illumination can reach most real-world luminance levels, with peaks exceeding 300,000 nits. By comparison, existing consumer HDR displays may peak at only 5,000 nits, and face many other limitations because of their large power consumption. Lasers are typically used as the light source in CGH because of their high ‘coherence’. A laser emits a single wavelength, which allows accurate interference and a faithful recreation of the desired light pattern.

For a proposed holographic HDR display, the metadata presented to the hologram generation software suite will result in image data values that correlate directly to light values. This means that a holographic HDR display will be able to present a full scene-referred content experience for the first time. “Scene-referred” means that the light values captured in-camera or generated by the renderer are left untampered with, so a scene-referred display can show real-world light values, literally referring to the original scene. This provides a far more realistic viewing experience than current displays on the market, which operate within a much more limited range of brightness.
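The difference can be illustrated with a deliberately simplified model in which a display reproduces scene luminance exactly up to its peak and clips anything brighter. Real displays apply tone mapping rather than a hard clip, so this is only a sketch. The two peak values are the figures quoted in this article, while the scene luminances are made-up examples.

```python
CONSUMER_HDR_PEAK_NITS = 5_000.0    # consumer HDR peak quoted in the article
HOLOGRAPHIC_PEAK_NITS = 300_000.0   # laser-illuminated peak quoted in the article


def displayed_nits(scene_nits: float, peak_nits: float) -> float:
    """Luminance shown by a display that hard-clips at its peak (simplified)."""
    return max(0.0, min(scene_nits, peak_nits))


# Hypothetical scene-referred luminances, in nits.
scene = {
    "shadow": 5.0,
    "lit wall": 800.0,
    "specular highlight": 120_000.0,
}

consumer = {name: displayed_nits(v, CONSUMER_HDR_PEAK_NITS) for name, v in scene.items()}
holographic = {name: displayed_nits(v, HOLOGRAPHIC_PEAK_NITS) for name, v in scene.items()}
```

In this model the holographic display shows every value unchanged, which is what scene-referred display means here, while the consumer display flattens the highlight to its 5,000 nit ceiling.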

Wide Colour Gamut Handling

One of the most important parts of any display is its ability to reproduce colour. To create impressive, realistic images, the HQH standard proposes a colour handling system that supports the latest work and research in colour reproduction, making the most of the pure single-wavelength laser sources used in the display. A key development in cinema in recent years has been the adoption of the Academy Color Encoding System (ACES), which makes the latest films and games look better than ever. Support for ACES is included in this standard. Also included is support for displays that use any combination of laser wavelengths and for varying input colour spaces. This ensures that existing content can be displayed as originally intended on varying hardware, and that future content looks as realistic as possible.
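Supporting arbitrary primaries usually comes down to building a matrix that maps a display's RGB values to the device-independent CIE XYZ space. The sketch below uses the standard colorimetric construction from the chromaticities of three primaries and a white point. It is not taken from the HQH paper, and the function names are illustrative. Rec. 709 primaries with a D65 white point are used only because their resulting matrix is well known and easy to check. For a laser display, the chromaticities of the chosen wavelengths would be passed in instead.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]
XY = Tuple[float, float]


def xy_to_xyz(xy: XY) -> List[float]:
    """Chromaticity (x, y) to XYZ, normalised so that Y = 1."""
    x, y = xy
    return [x / y, 1.0, (1.0 - x - y) / y]


def det3(m: Matrix) -> float:
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(m: Matrix, b: Sequence[float]) -> List[float]:
    """Solve m @ s = b for a 3x3 system using Cramer's rule."""
    d = det3(m)
    solution = []
    for col in range(3):
        replaced = [[b[r] if c == col else m[r][c] for c in range(3)]
                    for r in range(3)]
        solution.append(det3(replaced) / d)
    return solution


def rgb_to_xyz_matrix(red: XY, green: XY, blue: XY, white: XY) -> Matrix:
    """Build the linear RGB -> XYZ matrix for the given primaries and white."""
    prims = [xy_to_xyz(p) for p in (red, green, blue)]
    # Columns of p are the XYZ coordinates of the red, green and blue primaries.
    p = [[prims[c][r] for c in range(3)] for r in range(3)]
    # Scale each primary so that RGB = (1, 1, 1) lands on the white point.
    scale = solve3(p, xy_to_xyz(white))
    return [[p[r][c] * scale[c] for c in range(3)] for r in range(3)]


# Rec. 709 primaries and D65 white, used here purely as a known reference.
REC709_TO_XYZ = rgb_to_xyz_matrix(
    (0.64, 0.33), (0.30, 0.60), (0.15, 0.06), (0.3127, 0.3290)
)
```

The middle row of the matrix gives the luminance contribution of each primary. For Rec. 709 it comes out at roughly 0.2126, 0.7152 and 0.0722. Converting content between two colour spaces then amounts to multiplying one such matrix by the inverse of the other.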

This research was supported by the Centre for Digital Entertainment at Bournemouth University. VividQ supports joint research projects with leading universities and best-in-class research in optics and electronics. Want to join us? Visit our Careers page to see our open roles.
